Voice recognition systems built into your phone, computer, or other devices, such as Siri, Google Now, Cortana, and Alexa, can respond to ultrasonic sounds far above the range of human hearing. If a computer or smartphone has voice features activated, the device could secretly be given commands to make phone calls, visit malicious websites, or trigger many other sensitive features without the user being aware.

This could be used for deliberate eavesdropping, surveillance, or other forms of espionage. If a command were given to dial a specific phone number, for instance, the call could connect and let the adversary listen in on all nearby conversations.

Previous research [here] from UC Berkeley and Georgetown University found that Google Now could interpret commands even when the audio had been severely distorted. See the previous article, "Manipulating Phone Commands."

Researchers from Zhejiang University in China designed a completely inaudible attack on speech recognition systems they dubbed “DolphinAttack”. Their system modulates voice commands using ultrasonic frequencies higher than 20 kHz. Their full report is available [here].
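To make the modulation step concrete, here is a minimal sketch of amplitude modulation, the scheme the paper describes. It is an illustration under stated assumptions, not the researchers' code: the 1 kHz test tone stands in for a voice command, and the 25 kHz carrier, 192 kHz sample rate, and function names are all hypothetical choices.

```python
import math

SAMPLE_RATE = 192_000   # assumed: high enough to represent a 25 kHz carrier
CARRIER_HZ = 25_000     # assumed carrier, above the ~20 kHz hearing limit
TONE_HZ = 1_000         # stand-in for a voice command (a single audible tone)
NUM_SAMPLES = 1_920     # 10 ms of audio

def amplitude_modulate(baseband, carrier_hz, sample_rate, depth=1.0):
    """Shift a baseband signal up to the carrier frequency using AM."""
    return [
        (1.0 + depth * m) * math.cos(2 * math.pi * carrier_hz * n / sample_rate)
        for n, m in enumerate(baseband)
    ]

def tone_magnitude(signal, freq_hz, sample_rate):
    """Amplitude of one frequency component (a single-bin DFT)."""
    w = 2 * math.pi * freq_hz / sample_rate
    re = sum(x * math.cos(w * n) for n, x in enumerate(signal))
    im = sum(x * math.sin(w * n) for n, x in enumerate(signal))
    return 2 * math.hypot(re, im) / len(signal)

baseband = [math.sin(2 * math.pi * TONE_HZ * n / SAMPLE_RATE)
            for n in range(NUM_SAMPLES)]
modulated = amplitude_modulate(baseband, CARRIER_HZ, SAMPLE_RATE)

# After modulation the energy sits at the carrier and its two sidebands,
# and essentially nothing is left at the audible 1 kHz tone.
for freq in (TONE_HZ, CARRIER_HZ - TONE_HZ, CARRIER_HZ, CARRIER_HZ + TONE_HZ):
    print(freq, round(tone_magnitude(modulated, freq, SAMPLE_RATE), 3))
```

The printout shows why the transmitted signal is inaudible: the original tone has moved entirely into the 24 to 26 kHz band.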

DolphinAttack is demonstrated by playing the command “Hey Siri” followed by a phone number, first audibly and then inaudibly using ultrasonic frequencies.

They found that the microphone and audio circuits common in smartphones and computers were still sensitive to these higher frequencies. The audio commands would be successfully interpreted by the speech recognition systems. They tested popular systems including Siri, Google Now, Samsung S Voice, Huawei HiVoice, Microsoft Cortana, and Amazon Echo (Alexa).
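The DolphinAttack paper attributes this behavior to nonlinearity in the microphone and amplifier circuits, which recovers the audible command from the ultrasonic signal before the speech recognizer sees it. The toy model below illustrates the idea. It assumes a microphone whose output has a small quadratic term; the coefficients, the 25 kHz carrier, and the function names are made up for illustration and do not describe any real device.

```python
import math

SAMPLE_RATE = 192_000   # assumed sample rate
CARRIER_HZ = 25_000     # assumed ultrasonic carrier
TONE_HZ = 1_000         # stand-in for the voice command
NUM_SAMPLES = 1_920     # 10 ms

def tone_magnitude(signal, freq_hz, sample_rate):
    """Amplitude of one frequency component (a single-bin DFT)."""
    w = 2 * math.pi * freq_hz / sample_rate
    re = sum(x * math.cos(w * n) for n, x in enumerate(signal))
    im = sum(x * math.sin(w * n) for n, x in enumerate(signal))
    return 2 * math.hypot(re, im) / len(signal)

def nonlinear_mic(signal, linear_gain=1.0, quadratic_gain=0.1):
    """Hypothetical microphone model: output = a1*x + a2*x^2."""
    return [linear_gain * x + quadratic_gain * x * x for x in signal]

# Amplitude-modulated input: the 1 kHz tone riding on the 25 kHz carrier.
am_signal = [
    (1.0 + math.sin(2 * math.pi * TONE_HZ * n / SAMPLE_RATE))
    * math.cos(2 * math.pi * CARRIER_HZ * n / SAMPLE_RATE)
    for n in range(NUM_SAMPLES)
]
recorded = nonlinear_mic(am_signal)

# The squared term multiplies the carrier by itself, which shifts a copy of
# the modulating tone back down to its original audible frequency.
before = tone_magnitude(am_signal, TONE_HZ, SAMPLE_RATE)
after = tone_magnitude(recorded, TONE_HZ, SAMPLE_RATE)
print("1 kHz component before mic:", round(before, 3))
print("1 kHz component after mic: ", round(after, 3))
```

In this model the 1 kHz component is absent from the transmitted signal but appears in the recorded one, scaled by the quadratic coefficient.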

Some of the attacks they were able to complete successfully include activating Siri to initiate a FaceTime call, having Google Now switch a phone into airplane mode, and even manipulating the navigation system in an Audi Q3.

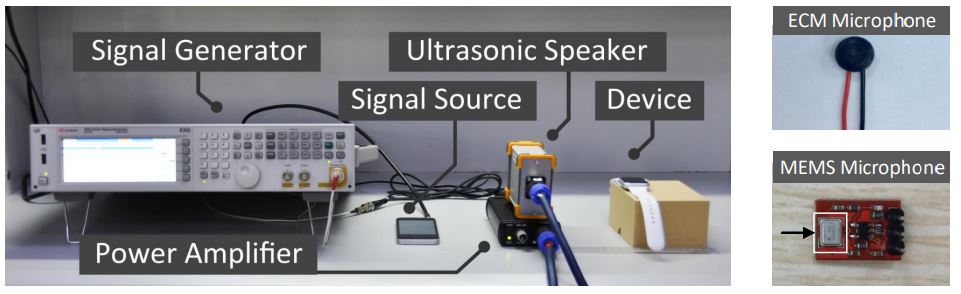

Lab setup for testing ultrasonic audio commands using electret condenser (ECM) and micro-electro-mechanical (MEMS) mics.

[Photo: Guoming Zhang, Chen Yan]

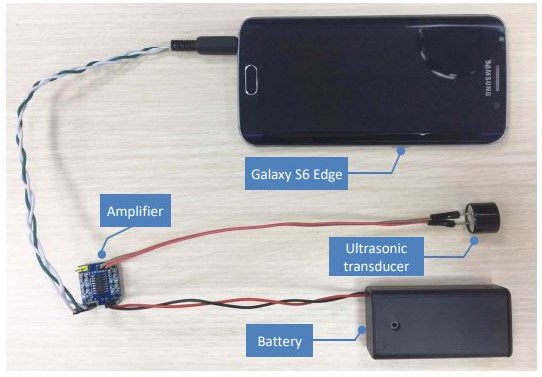

Their tests required that the modulated source be fairly close to the microphone of the device under test, typically from 10 to 160 cm. The lab setup, which used a higher-powered signal source, achieved greater range than the smaller portable system they designed.

Portable setup for ultrasonic audio commands using a Galaxy S6 Edge, an ultrasonic transducer, and a low-cost amplifier.

[Photo: Guoming Zhang, Chen Yan]

The researchers did offer a few defenses that seem to fall on the manufacturers, including filtering microphone input to block or suppress ultrasonic frequencies, adding a module that could cancel a baseband part of the modulation, and a software countermeasure that could block commands based on distinctive features of the ultrasonic attack.
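As a rough illustration of the first defense, the sketch below builds a low-pass filter that passes speech frequencies and suppresses content above 20 kHz. It is a digital stand-in only: in a real device the suppression would have to happen before any nonlinear stage in the analog path, since a command that has already been demodulated sits in the audible band and cannot be filtered out. The filter design (a Hamming-windowed sinc FIR), the 192 kHz sample rate, the tap count, and the function names are assumptions chosen for the example, not details from the paper.

```python
import cmath
import math

def lowpass_taps(cutoff_hz, sample_rate, num_taps=101):
    """Hamming-windowed sinc low-pass FIR taps, normalized to unity DC gain."""
    fc = cutoff_hz / sample_rate          # cutoff in cycles per sample
    mid = (num_taps - 1) / 2
    taps = []
    for k in range(num_taps):
        x = k - mid
        # Ideal low-pass impulse response, with the x == 0 limit handled.
        ideal = 2 * fc if x == 0 else math.sin(2 * math.pi * fc * x) / (math.pi * x)
        window = 0.54 - 0.46 * math.cos(2 * math.pi * k / (num_taps - 1))
        taps.append(ideal * window)
    total = sum(taps)
    return [t / total for t in taps]

def gain_at(taps, freq_hz, sample_rate):
    """Magnitude of the filter's frequency response at one frequency."""
    w = 2 * math.pi * freq_hz / sample_rate
    return abs(sum(h * cmath.exp(-1j * w * k) for k, h in enumerate(taps)))

SAMPLE_RATE = 192_000   # assumed sample rate
taps = lowpass_taps(cutoff_hz=20_000, sample_rate=SAMPLE_RATE)

voice_gain = gain_at(taps, 1_000, SAMPLE_RATE)        # typical speech content
ultrasonic_gain = gain_at(taps, 25_000, SAMPLE_RATE)  # assumed attack carrier
print("gain at 1 kHz: ", round(voice_gain, 4))
print("gain at 25 kHz:", round(ultrasonic_gain, 4))
```

A filter like this leaves speech essentially untouched while strongly attenuating a 25 kHz carrier, which is the behavior the hardware fix would need to provide ahead of the microphone's amplifier.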

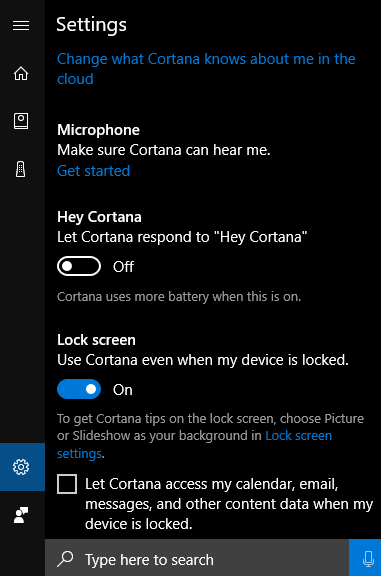

Microsoft Cortana Settings

Simpler countermeasures include disabling the voice recognition features of your devices through their standard settings. The microphone on many smartphones can also be disabled by plugging in a 3.5 mm external mic connector that has had the microphone disconnected or cut off. If you use an external microphone on your computer, you may want to choose one with a physical switch to keep it turned off when not in use.

This may not pose a major threat for most people at this time, due to distance requirements and other limitations. As more and more sensors are introduced into our environment, though, we should be aware of their presence at all times, as well as what information is communicated in their vicinity.

Full PDF:

DolphinAttack: Inaudible Voice Commands

Additional reports can be read at:

http://thehackernews.com/2017/09/ai-digital-voice-assistants.html

https://www.fastcodesign.com/90139019/a-simple-design-flaw-makes-it-astoundingly-easy-to-hack-siri-and-alexa

Zhejiang University researchers include:

Guoming Zhang, Chen Yan, Xiaoyu Ji, Tianchen Zhang, Taimin Zhang, and Wenyuan Xu.