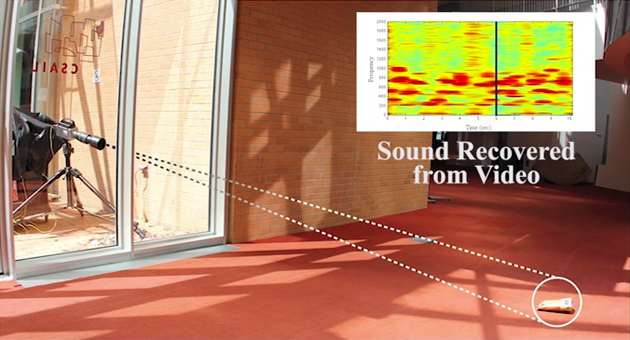

‘We’re recovering sounds from objects,’ said lead researcher Abe Davis in a statement. ‘That gives us a lot of information about the sound that’s going on around the object, but it also gives us a lot of information about the object itself, because different objects are going to respond to sound in different ways.’

Typically this technique requires a high-speed camera that captures frames at a higher rate than the frequency of the vibrations, recording thousands of frames per second, far more than ordinary smartphone cameras can manage.

However, the researchers were also able to reconstruct a lower-quality audio signal from standard 60 frames per second footage by inferring the missing information. The team claim this could provide a clear enough signal to indicate the number of speakers being recorded, their gender and possibly even their individual identities.

‘When sound hits an object, it causes the object to vibrate,’ said Davis. ‘The motion of this vibration creates a very subtle visual signal that’s usually invisible to the naked eye. People didn’t realise that this information was there.’

The researchers measured the mechanical properties of the objects they filmed and determined that their motions were about a tenth of a micrometre in size, which corresponds to five thousandths of a pixel in a close-up image. Even so, they were able to detect this movement by looking at changes in the colour of individual pixels.
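To put those two figures side by side, the short calculation below works out how much of the object a single pixel covers. It uses only the numbers quoted above; the variable names are purely illustrative.

```python
# Figures quoted in the article
motion_um = 0.1        # object motion: about a tenth of a micrometre
motion_pixels = 0.005  # the same motion expressed in pixels

# Width of the object covered by one pixel in the close-up image
um_per_pixel = motion_um / motion_pixels
print(f"One pixel spans roughly {um_per_pixel:.0f} micrometres")
```

In other words, each pixel spans roughly 20 micrometres of the object, so the vibration is about 200 times smaller than the finest detail the camera resolves directly.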

A pixel captured at the boundary of an object appears as a mixture of the colours on either side of that boundary. For example, a pixel on the border of a red object against a blue background will appear purple. If the boundary moves slightly, the shade of the pixel will alter accordingly, becoming more red or more blue.
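The red-and-blue example can be sketched in a few lines of Python. This is a simplified illustration rather than the researchers' code: it assumes the pixel colour is a straight linear blend of the two colours, ignores sensor noise and rounding, and the function names and colour values are hypothetical.

```python
RED = (255.0, 0.0, 0.0)
BLUE = (0.0, 0.0, 255.0)

def boundary_pixel(coverage, fg=RED, bg=BLUE):
    """Colour of a pixel when a fraction `coverage` of it lies on the object."""
    return tuple(coverage * f + (1.0 - coverage) * b for f, b in zip(fg, bg))

def estimate_coverage(pixel, fg=RED, bg=BLUE):
    """Invert the blend by projecting the pixel colour onto the bg-to-fg line."""
    num = sum((p - b) * (f - b) for p, f, b in zip(pixel, fg, bg))
    den = sum((f - b) ** 2 for f, b in zip(fg, bg))
    return num / den

before = boundary_pixel(0.500)  # boundary halfway across the pixel: purple
after = boundary_pixel(0.505)   # boundary moved by five thousandths of a pixel
shift = estimate_coverage(after) - estimate_coverage(before)
print(f"Estimated boundary shift: {shift:.4f} pixels")
```

Under these idealised assumptions the tiny change in shade maps directly back to a sub-pixel shift of the boundary. In real footage a single pixel would be far too noisy on its own, which is why many measurements have to be combined.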

Some boundaries in an image are fuzzier than a single pixel in width, however. So the researchers borrowed a technique from earlier work on algorithms that amplify minuscule variations in video to make visible previously undetectable motions: for example, the breathing of a baby filmed in a hospital.

The algorithm uses a series of image filters that separate fluctuations at different boundaries moving in different directions. It then combines this data, giving greater weight to measurements made at very distinct boundaries, to infer the motions of the object as a whole.
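The combining step can be illustrated with a weighted average. The sketch below is an assumption-laden toy, not the team's algorithm: it takes a handful of made-up local motion estimates, each paired with a hypothetical "edge strength" score, and weights each estimate by the square of that score so that sharp boundaries dominate the result.

```python
def combine_motions(estimates, edge_strengths):
    """Weighted average of local motion estimates.

    Weights are the squared edge strengths (an illustrative choice), so
    measurements taken at distinct boundaries count for more.
    """
    weights = [s ** 2 for s in edge_strengths]
    total = sum(weights)
    if total == 0:
        return 0.0  # no usable boundaries, so no motion estimate
    return sum(w * e for w, e in zip(weights, estimates)) / total

# Hypothetical values: two sharp boundaries agree, one faint boundary is noisy
estimates = [0.004, 0.006, 0.050]  # local motion, in pixels
strengths = [1.0, 1.0, 0.1]        # how distinct each boundary is

combined = combine_motions(estimates, strengths)
plain_mean = sum(estimates) / len(estimates)
print(f"weighted: {combined:.4f} px, unweighted: {plain_mean:.4f} px")
```

With these made-up numbers the weighted figure stays close to the two reliable measurements, whereas a plain average is dragged well away from them by the noisy one.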